In Eddy Current testing, how to define reference defects is a recurring question. Simple as it may seem, the subject is critical, and this article aims to clarify it. For an NDT operator or a product / process supervisor, the objective is clear: sort out all products with surface defects, that is, defects that can be observed visually. Given this objective, the “logical” approach would be to set the Eddy Current test parameters (gain, frequency, and filters) so that the natural defects are detected and sorted accordingly.

Same natural defect for testing

This set of test parameters would be saved in the instrument's library and used for all future testing. To confirm that the instrument is working properly, the operator would run the product with the natural defect through the Eddy Current sensor under the same settings. However, the results may not be consistent.

Problem

- The depth / severity of the natural defect is not known.

- The natural defect may change in dimension over time: its depth may decrease through repeated use, or increase as the crack grows.

Different natural defects for testing

The alternative approach would be to pick a fresh natural defect every time and use that to set up the Eddy Current test parameters for testing.

Problem

- We can be confident that the test settings will pick up that particular natural defect, but since its depth is not known, the resulting settings may differ from one setup to the next.

Result

In both cases, repeatability and reliability are compromised.

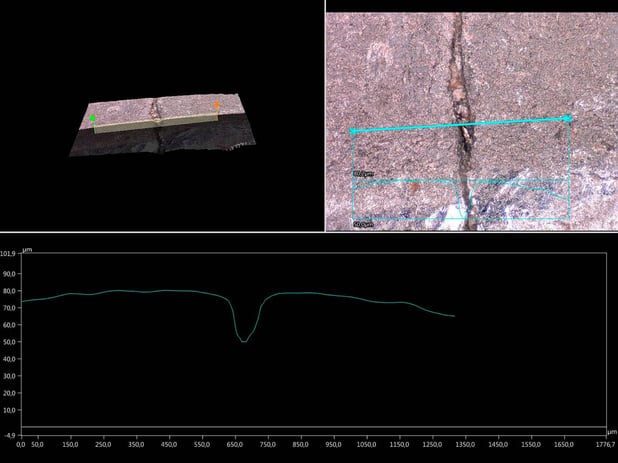

Analysis of both defects

The limitation of a procedure based on natural defects is the inconsistency of the setup. Natural defects vary, and their “severity” is judged by visual inspection, which makes the setup both inconsistent and dependent on the judgement of the person performing it.

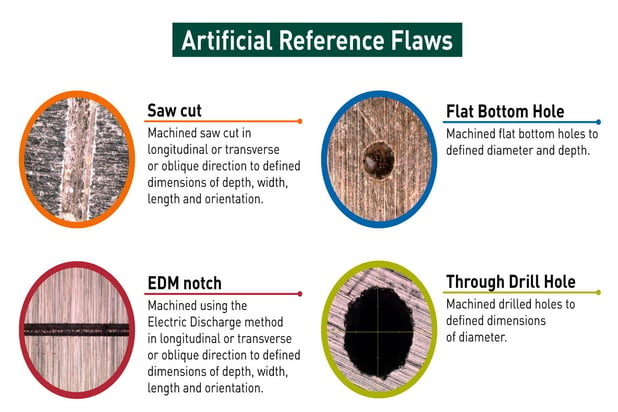

This could result in varying test settings. To obtain a reliable and repeatable test setting, an artificial (machined) reference “defect” of known dimensions should be used. Because its dimensions are known, such a reference standard can be reproduced if the original is lost or misplaced. Such a reference “defect” is called an Artificial Reference Indicator, or ARI for short.

A question that often comes up is how to define the dimensions and orientation of such an ARI. The definition can be based on an ASTM or other accepted standard, or on mutual agreement between the user and the producer of the product. However, if the testing is carried out for internal quality assurance and the objective is to sort “all” defects, the best approach is to carry out a study comparing naturally occurring defects and to define a corresponding ARI.

We, FOERSTER, can carry out such a study and help you establish an ARI for each product and each application.

Remark: Some users simply use a fixed set of test parameters. This becomes a real issue when an instrument has to be replaced because of an upgrade, a newer model, or a breakdown. For test results to be repeatable and reliable, the test settings must be independent of the instrument used. Using an ARI mitigates such variations, since the settings are always referenced to the amplitude and signal-to-noise ratio (SNR) of a defined ARI.
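
To illustrate what referencing a setup to the ARI amplitude and SNR can look like in practice, here is a minimal Python sketch. It is not a FOERSTER procedure or instrument API. The function names, the example amplitudes, and the minimum SNR value are hypothetical, and the sketch assumes that the displayed signal amplitude scales linearly with gain, so that a gain change in dB equals 20·log10 of the amplitude ratio.

```python
import math


def snr_db(ari_amplitude: float, noise_amplitude: float) -> float:
    """Signal-to-noise ratio of the ARI indication, in dB (amplitude ratio)."""
    if ari_amplitude <= 0 or noise_amplitude <= 0:
        raise ValueError("amplitudes must be positive")
    return 20.0 * math.log10(ari_amplitude / noise_amplitude)


def gain_correction_db(measured_amplitude: float, target_amplitude: float) -> float:
    """Gain change in dB that brings the ARI indication to the target amplitude.

    Assumption: amplitude is proportional to linear gain.
    A positive result means the gain has to be increased.
    """
    if measured_amplitude <= 0 or target_amplitude <= 0:
        raise ValueError("amplitudes must be positive")
    return 20.0 * math.log10(target_amplitude / measured_amplitude)


def setup_is_acceptable(ari_amplitude: float, noise_amplitude: float,
                        min_snr_db: float) -> bool:
    """True if the ARI indication stands out of the noise by at least min_snr_db."""
    return snr_db(ari_amplitude, noise_amplitude) >= min_snr_db


# Hypothetical example: a replacement instrument shows the same ARI at
# 4.0 screen units, while the documented target for this ARI is 8.0 units.
correction = gain_correction_db(measured_amplitude=4.0, target_amplitude=8.0)
print(f"Gain correction: {correction:+.1f} dB")

# After correction the ARI reads 8.0 units against 2.0 units of noise.
print(f"SNR: {snr_db(8.0, 2.0):.1f} dB")
print("Setup acceptable:", setup_is_acceptable(8.0, 2.0, min_snr_db=9.5))
```

The point of the sketch is that the documented quantities are the ARI amplitude and SNR rather than a raw gain value, so the same targets can be re-established on a different instrument by running the same ARI.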